If you are following my progress in the Data Science Learning Club, you might know that I am using NFL data for the tasks. For predicting sports events, I think it is important to have statistics not only about the players, teams and previous games but also about the weather. From my time as a soccer player, I can tell you that it makes quite a difference whether it is snowing, 30°C or more, or moderate. One could argue that the weather influences both teams and therefore no one has an advantage, but I think that everyone responds differently to different conditions.

The data source

After only searching for a short time, I found a website called NFLWeather which provides weather forecasts for every match back to 2009.

Web scraping: rvest

I had looked into web scraping before, but it seemed like a dirty and cumbersome task to me.

In my experience, almost everything related to data has already been implemented nicely by someone in R, so I wanted to give it another try. I found the package rvest by @hadleywickham, which is always a very good sign with respect to R package quality.

The code

Checking out their archive, I worked out the structure of their links and saw that they go back to 2009. So I wrote this function to parse the page, find the first table (there is only one), and convert it to a data.frame:

    load_weather <- function(year, week) {
      base_url <- "http://nflweather.com/week/"
      if (year == 2010) { # necessary because of different file naming
        start_url <- paste0(base_url, year, "/", week, "-2/")
      } else {
        start_url <- paste0(base_url, year, "/", week, "/")
      }
      if (year == 2013 && week == "pro-bowl") {
        return(NULL)
      }

      tryCatch({
        page <- html(start_url, encoding = "ISO-8859-1")
        table <- page %>% html_nodes("table") %>% .[[1]] %>% html_table()
        table <- cbind("Year" = year, "Week" = week,
                       table[, c("Away", "Home", "Forecast", "Extended Forecast", "Wind")])
        return(table)
      },
      error = function(e) {
        # report which page failed, using the function's own arguments
        print(paste(conditionMessage(e), "Year", year, "Week", week))
        return(NULL)
      })
    }

The function got a lot longer than anticipated, but let me explain it:

- Parameters: year and week

- start_url is built from the base_url that's always the same and the two parameters. The only difference is for year 2010, where for no apparent reason "-2" is added to each link.

- We have to skip the pro-bowl week in 2013, because that page does not exist.

- Then we have some error handling because other pages might not exist or might become unavailable.

- Line 13 (the html call): I parse the page. html is actually deprecated and read_html should be used instead, but I currently have an older version of R running.

- Line 14 (the %>% chain): I use the magrittr pipe operator as used in the package examples, but this can also be done without it. Just see the code below.

- Line 15 (the cbind call): I create a data.frame by selecting only the columns I need and adding the Year and Week information to each row.

    html_table(html_nodes(page, "table")[[1]])
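The URL rules from the list above can be isolated in a small helper, which makes them easy to check without hitting the site. This is only a sketch: build_week_url is a hypothetical name that is not part of my script, and it assumes the link pattern described above still holds.

```r
# Hypothetical helper (not in the original script): builds the archive URL
# for one year/week, following the link pattern described above.
build_week_url <- function(year, week, base_url = "http://nflweather.com/week/") {
  # In 2010 the site appends "-2" to every week slug.
  suffix <- if (year == 2010) "-2" else ""
  paste0(base_url, year, "/", week, suffix, "/")
}

build_week_url(2009, "week-1")  # "http://nflweather.com/week/2009/week-1/"
build_week_url(2010, "week-1")  # "http://nflweather.com/week/2010/week-1-2/"
```

Keeping the URL logic separate from the download means the 2010 special case can be verified on its own.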

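This is how I call the code to build one large data.frame (years and weeks are defined in the complete code at the end of this post):

    # check.names = FALSE keeps the space in "Extended Forecast"
    weather_data <- data.frame("Year" = integer(0), "Week" = character(0),
                               "Away" = character(0), "Home" = character(0),
                               "Forecast" = character(0),
                               "Extended Forecast" = character(0),
                               "Wind" = character(0),
                               check.names = FALSE, stringsAsFactors = FALSE)

    for (y in years) {
      for (w in weeks) {
        weather_data <- rbind(weather_data, load_weather(y, w))
      }
    }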
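Growing a data.frame with rbind inside a nested loop copies it on every iteration. One alternative is to collect the per-page results in a list and bind them once at the end. The sketch below assumes a loader with the same contract as load_weather (it returns a data.frame or NULL). collect_weather and fake_loader are hypothetical names, and the stand-in loader exists only so the example runs without network access.

```r
# Hypothetical helper: calls loader(year, week) for every combination and
# binds the non-NULL results into a single data.frame.
collect_weather <- function(years, weeks, loader) {
  # week varies fastest, matching the order of the nested for loops
  combos <- expand.grid(week = weeks, year = years, stringsAsFactors = FALSE)
  pages <- Map(loader, combos$year, combos$week)
  pages <- Filter(Negate(is.null), pages)  # drop pages that failed or were skipped
  if (length(pages) == 0) return(NULL)
  result <- do.call(rbind, pages)
  rownames(result) <- NULL
  result
}

# Stand-in loader for illustration only: it returns no weather data and
# yields NULL for one combination to mimic a missing page.
fake_loader <- function(year, week) {
  if (year == 2013 && week == "pro-bowl") return(NULL)
  data.frame(Year = year, Week = week, stringsAsFactors = FALSE)
}

demo <- collect_weather(2012:2013, c("week-1", "pro-bowl"), fake_loader)
nrow(demo)  # 3
```

With the real function, the call would be weather_data <- collect_weather(years, weeks, load_weather).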

The output

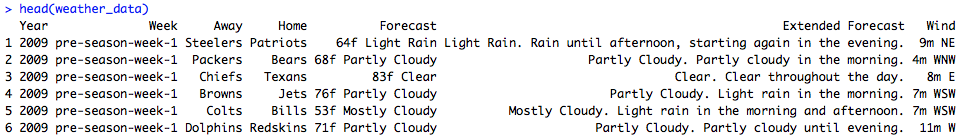

The output is a data.frame with 2832 rows just like the ones in the screenshot.

Download complete code

The complete source is listed below.

    library(rvest)

    years <- 2009:2015
    weeks <- c(paste0("pre-season-week-", 1:4), paste0("week-", 1:17),
               "wildcard-weekend", "divisional-playoffs", "conf-championships",
               "pro-bowl", "superbowl")

    load_weather <- function(year, week) {
      base_url <- "http://nflweather.com/week/"
      if (year == 2010) { # necessary because of different file naming
        start_url <- paste0(base_url, year, "/", week, "-2/")
      } else {
        start_url <- paste0(base_url, year, "/", week, "/")
      }
      if (year == 2013 && week == "pro-bowl") {
        return(NULL)
      }

      tryCatch({
        page <- html(start_url, encoding = "ISO-8859-1")
        table <- page %>% html_nodes("table") %>% .[[1]] %>% html_table()
        table <- cbind("Year" = year, "Week" = week,
                       table[, c("Away", "Home", "Forecast", "Extended Forecast", "Wind")])
        return(table)
      },
      error = function(e) {
        # report which page failed, using the function's own arguments
        print(paste(conditionMessage(e), "Year", year, "Week", week))
        return(NULL)
      })
    }

    # check.names = FALSE keeps the space in "Extended Forecast"
    weather_data <- data.frame("Year" = integer(0), "Week" = character(0),
                               "Away" = character(0), "Home" = character(0),
                               "Forecast" = character(0),
                               "Extended Forecast" = character(0),
                               "Wind" = character(0),
                               check.names = FALSE, stringsAsFactors = FALSE)

    for (y in years) {
      for (w in weeks) {
        weather_data <- rbind(weather_data, load_weather(y, w))
      }
    }

    #### code without pipe ####
    # equivalent to the piped line inside load_weather(), where page is defined:
    # html_table(html_nodes(page, "table")[[1]])
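As mentioned above, html is deprecated in favor of read_html. As a rough sketch of what the parsing step could look like with read_html, the snippet below runs on a small inline HTML string rather than the live site. The table contents are made-up placeholders, not real NFLWeather data, and first_table is a hypothetical helper name.

```r
library(rvest)

# Hypothetical helper: returns the first <table> of a parsed page.
first_table <- function(page) {
  html_table(html_nodes(page, "table")[[1]])
}

# Placeholder markup for illustration only; not real NFLWeather data.
sample_html <- "<html><body><table>
  <tr><th>Away</th><th>Home</th><th>Wind</th></tr>
  <tr><td>Team A</td><td>Team B</td><td>placeholder</td></tr>
</table></body></html>"

page <- read_html(sample_html)  # read_html also accepts a URL
tbl <- first_table(page)
names(tbl)
```

Inside load_weather, the same idea would mean replacing the html call with read_html(start_url), assuming an rvest version that provides it.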